Will robots replace writers? The future of predictive text AI

How good can language models and text prediction AI get? We speak to Typewise’s very own Luca Campanella – part of our AI engineering team – and get his vision of how text prediction AI will continue to change the way we interact with our computers, phones and other devices.

We’re starting to see autosuggest – or predictive text – systems appear in tools we use in everyday life. Right now, what is the most advanced text prediction technology out there?

Probably the most advanced system right now is GPT-3 (the third incarnation of the ‘Generative Pre-trained Transformer’). This is a huge language model created by the US company OpenAI (which was co-founded by Elon Musk). GPT-3 is now exclusively licensed to Microsoft.

OpenAI conducts research in the field of AI with the stated goal of promoting and developing friendly AI in a way that benefits humanity as a whole.

You could almost say that GPT-3 was trained on the whole internet. It has excellent language generation capabilities and a lot of knowledge of the world. For example, if you asked GPT-3 who was the US president in 1801, it would answer correctly: Thomas Jefferson.

These advanced networks use something called the ‘transformer architecture’, which is based on the attention mechanism. Each word in the sentence pays more or less ‘attention’ (assigns more or less weight) to every other word, depending on how related the two are.

For example, a pronoun later in the sentence may assign a high weight to the subject it refers to. This weighting mechanism makes these networks excellent at predicting the next word, or answering simple questions.
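To make the weighting idea concrete, here is a minimal sketch of attention weights in plain Python. It is an illustration only, not how GPT-3 is implemented: the two-dimensional word vectors are invented for the example, and each word's vector is used directly as both query and key, whereas real transformers learn separate projections over much larger vectors.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)

# Hypothetical toy vectors, chosen by hand so that "she" resembles "Anna".
words = ["Anna", "dropped", "the", "cup", "because", "she"]
vectors = {
    "Anna": [1.0, 0.0],
    "dropped": [0.0, 1.0],
    "the": [0.1, 0.1],
    "cup": [0.2, 0.8],
    "because": [0.1, 0.2],
    "she": [0.9, 0.1],
}

# How much weight does the pronoun "she" assign to each word in the sentence?
weights = attention_weights(vectors["she"], [vectors[w] for w in words])
for word, weight in zip(words, weights):
    print(f"{word:>8}: {weight:.3f}")
```

With these hand-picked vectors the pronoun gives its largest weight to the subject, ‘Anna’. In a trained network, the vectors (and therefore the weights) are learned from data rather than set by hand.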

Sounds impressive. What are the limitations of such systems?

One of the limitations of GPT-3 and similar systems is that this knowledge is stored in an unstructured way. So they're not able to learn or reason in a sophisticated fashion. For example, if we now ask GPT-3 who was the president of the US in 1600, it will answer Queen Elizabeth I, as it’s not able to understand that the question is ill-posed.

Another important limitation of these networks is that they need an enormous amount of data to be trained on, which makes them very expensive. The GPT-3 system cost between 10 and 20 million USD to train, meaning that training your own version is well out of reach for all but the largest organizations.

So, the way these systems are normally used is that a large company such as Google, Facebook or OpenAI will perform the expensive training part, while smaller companies or single actors will take the resulting network and fine-tune it on their own dataset. This avoids having to carry out the data-hungry, expensive training procedure.

How is Typewise Text Prediction AI different to AI used in GPT-3?

The biggest difference is one of practicality. Systems like GPT-3 require a huge amount of processing power (and therefore expense). Our predictive text engine works on-device, making it practical for any business or individual, rather than only Big Tech. It doesn’t need to be connected to a huge network and therefore it can provide highly sophisticated prediction and autocorrection, but in a very ‘lightweight’ way. This makes it practical for use on everyday laptops and smartphones and our text prediction API opens up our tech for use on other platforms.

How fast is predictive text AI evolving? Are you expecting to see big jumps in sophistication over the next few years?

In the last three to four years, text prediction AI has accelerated very quickly. This is in part down to the invention of the new ‘transformer’ architecture that has the ability to scale very well, allowing the rapid development of bigger and bigger models.

Up until now, the bigger the model, the better it performed. The more data the system can consume and process, the more sophisticated it becomes. But the next advancement is more about the ability of AI to reason. Currently, the big systems regurgitate information, and do it very well, but they are only able to repurpose what they have already learned from the internet.

We’re now seeing movement towards a hybrid of the newer network architectures and the older AI approach, which may allow more structured reasoning on top of these networks.

However, so far it’s proven to be a challenge to merge these two worlds.

As the predictive text capabilities increase, what are the real-life applications for the everyday person?

Well, writing assistant software will get better and better. We’re already seeing Gmail’s Smart Compose and Microsoft Text Suggestions becoming more useful. The predictive text writing assistant we have built at Typewise will also play a significant role in how people write emails, messages and documents.

I foresee a world where writing becomes a joint effort between human and AI. Ideally, the AI will take over the boring parts of composition, for example the repetitive sentences, and ensuring the spelling and grammar are correct.

I think we’re seeing the same trend in other areas of AI, for example self-driving cars. The first wave will be for the computer to take over the most predictable and boring part of driving, namely highway driving.

Back to writing, with an AI assistant taking care of the mundane parts of composition, the human is more free to focus on the creative part.

I believe we’ll see such technology being used in more and more text input situations. You could see a future where it’s standard to have a writing assistant AI for any text entry situation on any device.

How do you think the balance of voice input versus typing input will go?

Certainly voice input has grown a lot over the last few years, and we see many people adopting devices like Alexa. However, text-based input remains the number one way of entering information into an electronic device.

If we’re talking far future, perhaps we’ll see a way for us to input thoughts directly into a device, thereby making voice or text entry obsolete. Until that happens, manual text entry – albeit augmented by AI writing assistants like Typewise – is likely to remain the predominant way of interfacing with computers and smartphones.

Are there any downsides to the increased use of predictive text AI? Will it make us more lazy at spelling or grammar?

Writing assistants will make it less important to know complex grammar rules or spelling. But should we consider this a downside? The invention of the calculator made it less important for us to be able to do mental arithmetic. The adoption of computers, tablets and smartphones has meant that writing by hand is now less important.

Do we lament the decline of the typewriter because we now have electronic keyboards? As technology continues to advance, our skill sets advance along with it.

Such advancements could cause a problem only if the technology suddenly became unavailable. Consider what would happen if all forms of electronic calculation disappeared overnight; most people would certainly be a lot slower with maths. But that scenario is also highly unlikely.

It’s certainly good to know how to write and spell correctly, but as the AI advances, we will simply get to the stage where it’s just not necessary to know it in detail, and therefore that time can be spent on learning and doing other things.

Could predictive text AI and writing assistants reduce the originality of our writing?

Yes, it’s possible that tools that suggest text might lead to a higher level of conformity in writing. But you could also look at it in the opposite way; a writer could use a text suggestion tool to be sure they were NOT using what the AI was predicting – thereby ensuring original writing. And a writing assistant could also propose words and phrases that the writer had not considered at all.

One interesting consideration on this topic is the danger of introducing societal biases into our text prediction AI. We have seen from current systems like GPT that different suggestions are given depending on whether the subject is a husband or a wife. This is because, of course, it’s just a statistical model that has learned from the internet. But we should be careful not to introduce such biases into future predictive text AI.
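As a rough illustration of how a purely statistical predictor picks up whatever patterns its training text contains, here is a toy next-word model based on simple word-pair counts. It is a sketch under stated assumptions, far simpler than GPT or the Typewise engine: the five training sentences are invented and deliberately skewed, and the function names `train_bigrams` and `suggest` are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(sentences):
    """Count, for every word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def suggest(counts, word, k=1):
    """Return the k words seen most often after `word` (empty if unseen)."""
    followers = counts.get(word.lower(), Counter())
    return [w for w, _ in followers.most_common(k)]

# Invented, deliberately skewed training text (an assumption for this example).
corpus = [
    "my husband works late",
    "my husband works abroad",
    "my wife cooks dinner",
    "my wife cooks well",
    "my wife works late",
]

model = train_bigrams(corpus)
print("husband ->", suggest(model, "husband"))
print("wife ->", suggest(model, "wife"))
```

The model has no notion of fairness; it simply repeats the most frequent continuation it has seen, so a skew in the training text becomes a skew in the suggestions.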

Do you think it will be possible for AI to eventually write entire articles or news reports, or even books?

Already there are writing assistants out there which – after adding some keywords, themes and selecting a tone of voice – are able to automatically generate a piece of writing in seconds, for example a blog post or sales email.

So, I think for short pieces of writing, yes, the AI can go pretty far. But for the real meat of a longer piece of writing, or an entire book, that’s far more complex and I think the AI is a long way off, because such composition requires a lot more reasoning.

To compose a piece of writing that has some form of story arc, weaving in characters mentioned earlier, progressing the narrative and ending with some conclusion is complex.

For fiction, there’s also the creative part. Inventing something completely new. Yes, writing AI can currently generate text by taking pieces from other books already written and compiling them in a similar way, but it’s not true intelligence or invention.

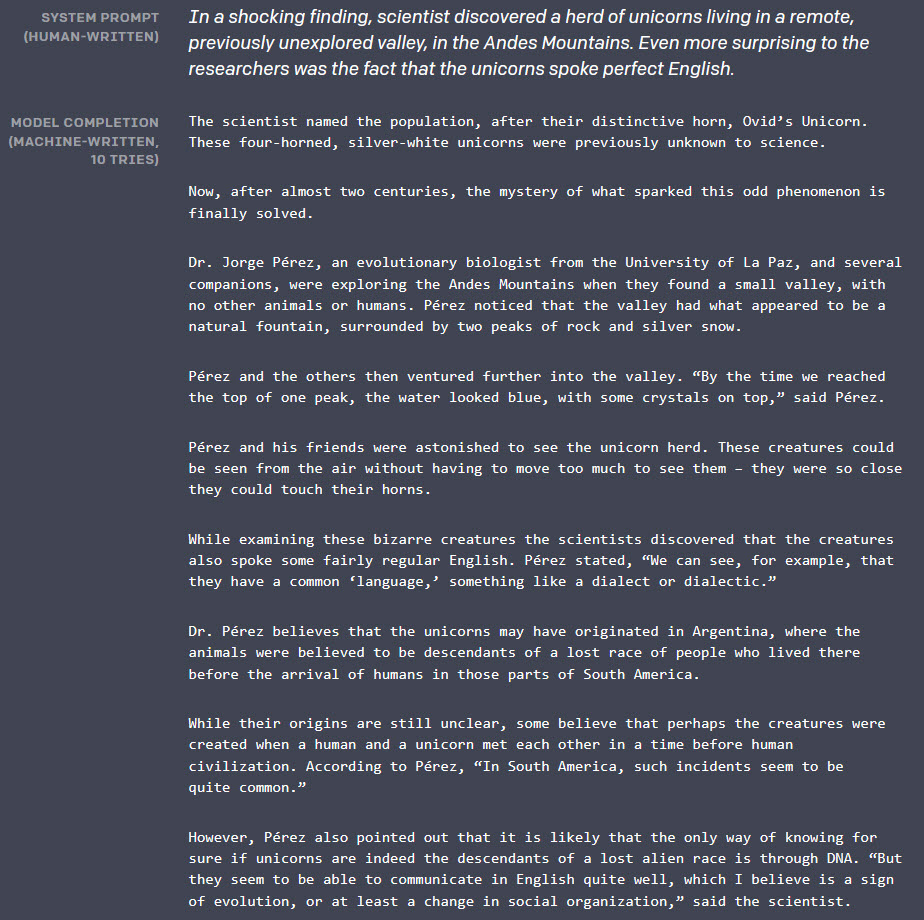

The well-known ‘unicorn story’ showed that the GPT system could create a reasonably coherent news article when given a prompt. It was able to take a few words, then generate a short article which referenced related terms and locations and was written in a journalistic style.

We’re still a way off from such AI being able to write a whole book or longer article that is convincing, but at the rate the language models are advancing, it’s likely this will eventually become possible.

Luca Campanella is an expert in Natural Language Processing and holds an MSc in Computer Science from ETH Zurich. He is part of Typewise’s AI team – building advanced writing assistant AI for the future of text entry.